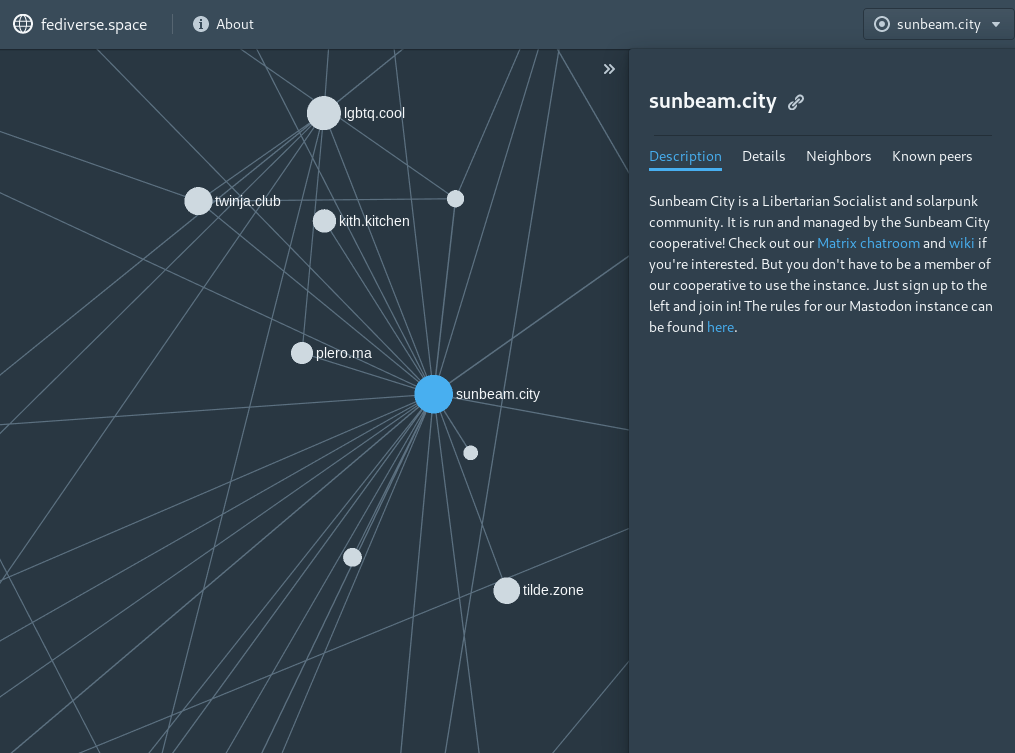

fediverse.space 🌐

The map of the fediverse that you always wanted.

Requirements

- For everything:
  - Docker
  - Docker-compose
- For the scraper + API:
  - Python 3
- For laying out the graph:
  - Java
- For the frontend:
  - Yarn

Running it

Backend

- `cp example.env .env` and modify environment variables as required
- `docker-compose build`
- `docker-compose up -d django`
  - if you don't specify `django`, it'll also start `gephi`, which should only be run as a regular one-off job
  - to run in production, run `caddy` rather than `django`

Frontend

- `cd frontend && yarn install`
- `yarn start`

Commands

Backend

After running the backend in Docker:

- `docker-compose exec web python manage.py scrape` scrapes the fediverse
  - It only scrapes instances that have not been scraped in the last 24 hours.
  - By default, it'll only scrape 50 instances in one go. If you want to scrape everything, pass the `--all` flag.
- `docker-compose exec web python manage.py build_edges` aggregates this information into edges with weights
- `docker-compose run gephi java -Xmx1g -jar build/libs/graphBuilder.jar` lays out the graph
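The selection rule for the scrape command described above (skip anything scraped in the last 24 hours, take at most 50 per run unless `--all` is passed) can be sketched roughly as follows. This is an illustration only, not the project's actual code: the function name `pick_instances`, the dictionary input, and the oldest-first ordering are all assumptions made for the example.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional

STALE_AFTER = timedelta(hours=24)  # README: skip instances scraped in the last 24 hours
DEFAULT_BATCH = 50                 # README: default number of instances per run


def pick_instances(last_scraped: Dict[str, Optional[datetime]],
                   now: datetime,
                   scrape_all: bool = False) -> List[str]:
    """Return the names of instances that are due for scraping.

    `last_scraped` maps an instance name to the time of its last scrape,
    or None if it has never been scraped (hypothetical data shape).
    """
    cutoff = now - STALE_AFTER
    stale = [name for name, ts in last_scraped.items()
             if ts is None or ts < cutoff]

    # Assumption: never-scraped instances go first, then the oldest scrapes.
    epoch = datetime.min.replace(tzinfo=timezone.utc)
    stale.sort(key=lambda name: last_scraped[name]
               if last_scraped[name] is not None else epoch)

    # scrape_all mirrors the --all flag: no batch limit.
    return stale if scrape_all else stale[:DEFAULT_BATCH]
```

Calling `pick_instances(data, now, scrape_all=True)` corresponds to passing `--all`; without it, the result is capped at 50 names.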

To run in production, use docker-compose -f docker-compose.yml -f docker-compose.production.yml instead of just docker-compose.
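If you script these commands, a small helper can add the two `-f` arguments for you. The sketch below is a hypothetical convenience wrapper, not part of the repository; the name `compose_command` and its `production` parameter are invented for illustration. It only builds the argument list and does not run anything.

```python
import shlex
from typing import List

BASE_FILE = "docker-compose.yml"
PROD_FILE = "docker-compose.production.yml"


def compose_command(args: List[str], production: bool = False) -> List[str]:
    """Build a docker-compose argument list, adding both -f files in production."""
    cmd = ["docker-compose"]
    if production:
        # Same file order as in the README: base file first, production overrides second.
        cmd += ["-f", BASE_FILE, "-f", PROD_FILE]
    return cmd + list(args)


def as_shell(cmd: List[str]) -> str:
    """Render the argument list as a single shell-safe string (e.g. for a crontab line)."""
    return shlex.join(cmd)
```

The resulting list can be passed to `subprocess.run`, or rendered with `as_shell` when you need a single line of text.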

An example crontab:

# crawl 50 stale instances (plus any newly discovered instances from them)

# the -T flag is important; without it, docker-compose will allocate a tty to the process

15,45 * * * * docker-compose -f docker-compose.yml -f docker-compose.production.yml exec -T django python manage.py scrape

# build the edges based on how much users interact

15 3 * * * docker-compose -f docker-compose.yml -f docker-compose.production.yml exec -T django python manage.py build_edges

# lay out the graph

20 3 * * * docker-compose -f docker-compose.yml -f docker-compose.production.yml run gephi java -Xmx1g -jar build/libs/graphBuilder.jar

Frontend

- `yarn build` to create an optimized build for deployment